IoSR Blog : 26 September 2014

S3A Recording Session

The last blog post I wrote introduced the S3A project, a large-scale EPSRC-funded project looking into immersive spatial audio. The first stage of the IoSR contribution to this project involves looking at the differences between methods of reproducing audio – from mono over a single loudspeaker, through common home reproduction formats such as 2.0 stereo, 5.1 surround sound, or soundbars, all the way up to high-end professional setups such as 22.2.

There are a number of possible ways of comparing reproduction methods. Qualitative data can be collected by asking listeners to describe the differences that they perceive between stimuli. Quantitive judgements include preference ratings or judgements on particular aspects of the sound (attributes such as spatial quality or envelopment), made either on absolute scales or comparatively between stimuli.

Recordings

In order to undertake such experiments, we needed suitable programme material for listeners to judge, and this material needed to be in a wide range of spatial audio formats. For some types of music, we could make use of existing multitrack content, which we can remix for a variety of different formats. However, in other cases it was desirable to make live recordings simultaneously using different microphone techniques to capture exactly the same performance for reproduction over different systems.

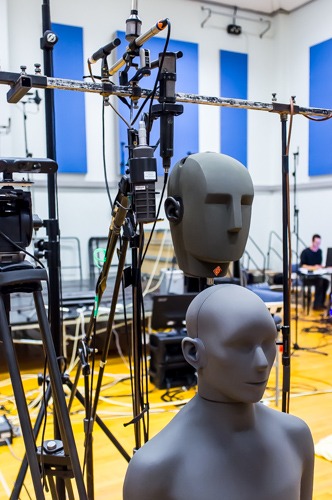

To capture the programme material, a large recording session was set up in Studio 1 at the University of Surrey. This is a large recording studio (approximately 250 square metres) with a reverberation time (RT60) of approximately 1.1 seconds. A total of ninety-three microphones were used in the following arrangements (information about most of the techniques can be found in Francis Rumsey’s book “Spatial Audio” [1]).

- Spaced pair of omnidirectional microphones

- Crossed pair of figure-of-eight microphones

- Double mid-side array

- Soundfield microphone (capturing 1st order Ambisonics B-format)

- Two 22.2 capture methods [2]

- Standard Hamasaki square (used for capturing surround sound ambience)

- Upwards-facing Hamasaki square

- Fukada tree

- Two binaural dummy heads (Cortex MKII and Neumann KU100)

- Various spot mics as appropriate

- 48-channel dual concentric circular array

It took around three days to set up, plug in, and calibrate all of the microphones. We also rigged four video cameras and an Xbox Kinect sensor, as some other project partners are working on audio-visual aspects such as tracking.

When the setup was complete, four different ensembles came and performed over a period of a few days: a jazz duo (Guildford-based composer and pianist Will Todd and bassist Gareth Huw Davies); a jazz quintet made up of University of Surrey Tonmeister and music graduates now working as professional musicians; a brass quintet (again heavily featuring Surrey graduates); and two actors from the Guildford School of Acting, who performed various pieces of dialogue.

A total of thirty-six recordings were made, resulting in close to 1 terabyte of audio data. After organization and archival of the recordings, the next stage of work involves identifying suitable excerpts for use in listening tests and creating mixes for different formats. This is currently being undertaken in a multi-channel loudspeaker setup using the Surrey Sound Sphere (see photo below). This facilitates quick switching between different loudspeaker arrangements so that the different recording techniques can be monitored.

As an example of the recordings undertaken, here are two versions of the same excerpt from the jazz duo session; the first was recorded with the crossed figure of eight microphone technique and the second with the Cortex binaural dummy head.

Recording made with a pair of figure-of-eight directivity microphones at 90 degrees to each other

Recording made with the Cortex binaural dummy head (note that this is intended for reproduction over headphones)

Listening experiment

As well as capturing the recordings for use in further experimentation, we were also interested in investigating the differences between listening to a real live performance compared with a reproduction of the same performance. In some cases – although definitely not all – the intention of a spatial audio reproduction is to recreate the experience of “being there” at a live event, and it is therefore interesting to find out what’s different between real listening and current spatial audio renderings.

The recording session gave us a rare opportunity to investigate this. A nine-channel (surround sound with height) reproduction system was set up in Studio 2 (just down the corridor from the recording session), and a mix of the microphone feeds was replayed over this system.

Listeners with and without training and experience in technical listening were asked to move freely between the real performance and the reproduction, writing down any thoughts they had about the differences between the listening experiences. The outcome of a procedure like this is a large set of qualitative data, which will be analysed to find out what the most significant differences were between the real performance and the reproduction. The first stage in exploring the data involves group discussions (which are currently ongoing) to establish any common themes that recur in observations from different participants. It’s possible that the results may lead to some “quick wins” that could be implemented in spatial audio reproduction systems for an improved listening experience.

Video

During one day of the recording session we made a short video introducing the IoSR contribution to the S3A project and exhibiting some of the work done on the recording session. You can watch this below.